targeted backdoor attacks on deep learning systems using data poisoning

|

Targeted Backdoor Attacks on Deep Learning Systems Using Data

15 Dec. 2017. That is, the attacker injects poisoning samples into the training set to achieve his adversarial goal. Different from all existing work |

|

Backdoor Attacks on the DNN Interpretation System

Chen X.; Liu |

|

Backdoor Attacks Against Deep Learning Systems in the Physical

unanswered: “can backdoor attacks succeed using physi- ject) and can poison data from all classes. ... jyt) are poisoned data-target label pairs. |

|

STRIP: A Defence Against Trojan Attacks on Deep Neural Networks

Chen X. |

|

BadNL: Backdoor Attacks against NLP Models with Semantic

Badnets: Identifying vulnerabilities in the machine learning model supply chain Targeted backdoor attacks on deep learning systems using data poisoning. |

|

Dataset Security for Machine Learning: Data Poisoning Backdoor

31 Mar. 2021. An example of a targeted poisoning attack is Venomave (Aghakhani et al., 2020), which attacks automatic speech recognition systems to alter the ... |

|

Backdoor Attacks on Image Classification Models in Deep Neural

the target label. Third in terms of concealment |

|

A Robust Countermeasures for Poisoning Attacks on Deep Neural

1 Aug. 2022. attacks poisoning attacks |

|

Can You Really Backdoor Federated Learning?

2 Dec. 2019. Targeted backdoor attacks on deep learning systems using data poisoning. arXiv preprint arXiv:1712.05526, 2017. |

|

CLEAR: Clean-Up Sample-Targeted Backdoor in Neural Networks

The data poisoning attack has raised serious security concerns on the safety of deep neural networks since it can lead to neural backdoor that |

|

Targeted Backdoor Attacks on Deep Learning Systems Using

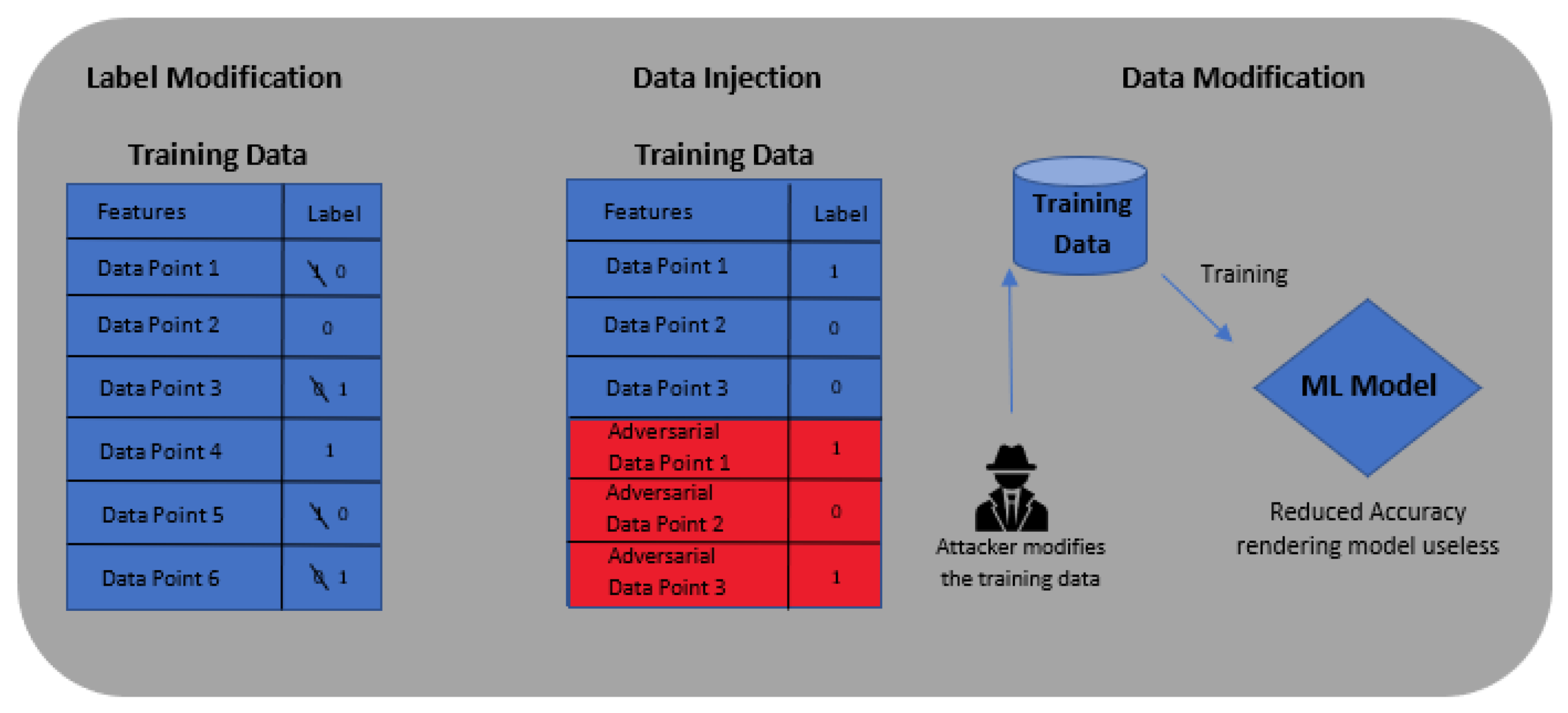

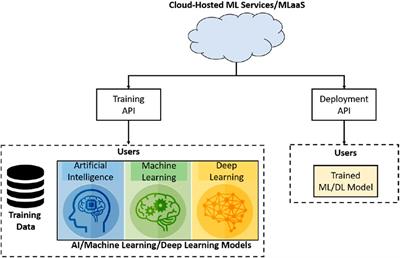


Is backdoor poisoning a threat to deep learning?

Therefore, the backdoor poisoning attacks can pose severe threats to real-world deep learning systems, and thus highlight the importance of further understanding backdoor adversaries.

What are data poisoning attacks?

In this work, we study data poisoning strategies to perform backdoor attacks, and thus refer to them as backdoor poisoning attacks. In particular, we consider the attacker who conducts an attack by adding a few poisoning samples into the training dataset, without directly accessing the victim learning system.
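The threat model above (a few poisoning samples added to the training set, no access to the learning system itself) can be sketched as follows. This is a minimal illustration, not the paper's method: the corner-patch trigger, the function name `poison_dataset`, and all parameters are assumptions introduced here, and the paper's own poisoning strategies are not reproduced in this excerpt.

```python
import numpy as np


def poison_dataset(images, labels, target_label, num_poison,
                   trigger_value=1.0, patch=3, seed=0):
    """Return a training set with `num_poison` backdoored samples appended.

    Hypothetical helper: each poisoning sample is a copy of a clean image
    with a small square trigger stamped in the bottom-right corner and its
    label set to `target_label`. The clean samples are left untouched.
    """
    rng = np.random.default_rng(seed)
    # Pick distinct clean images to serve as carriers for the trigger.
    idx = rng.choice(len(images), size=num_poison, replace=False)
    poisoned = images[idx].copy()
    # Stamp the (assumed) trigger pattern: a patch x patch block of constant value.
    poisoned[:, -patch:, -patch:] = trigger_value
    poisoned_labels = np.full(num_poison, target_label, dtype=labels.dtype)
    # The attacker only contributes data; the training procedure is unchanged.
    return (np.concatenate([images, poisoned]),
            np.concatenate([labels, poisoned_labels]))


# Usage: 100 blank 8x8 "images" with labels 0..9, plus 5 poisoning samples.
clean_x = np.zeros((100, 8, 8), dtype=np.float32)
clean_y = np.arange(100) % 10
train_x, train_y = poison_dataset(clean_x, clean_y, target_label=7, num_poison=5)
```

The sketch only touches the dataset, which mirrors the assumption that the attacker cannot modify the victim's model or training code.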

Are backdoor poisoning strategies effective?

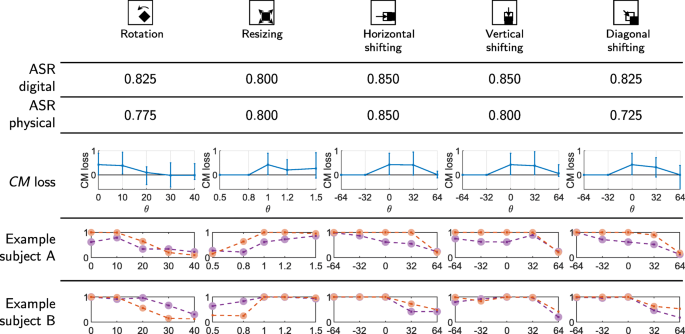

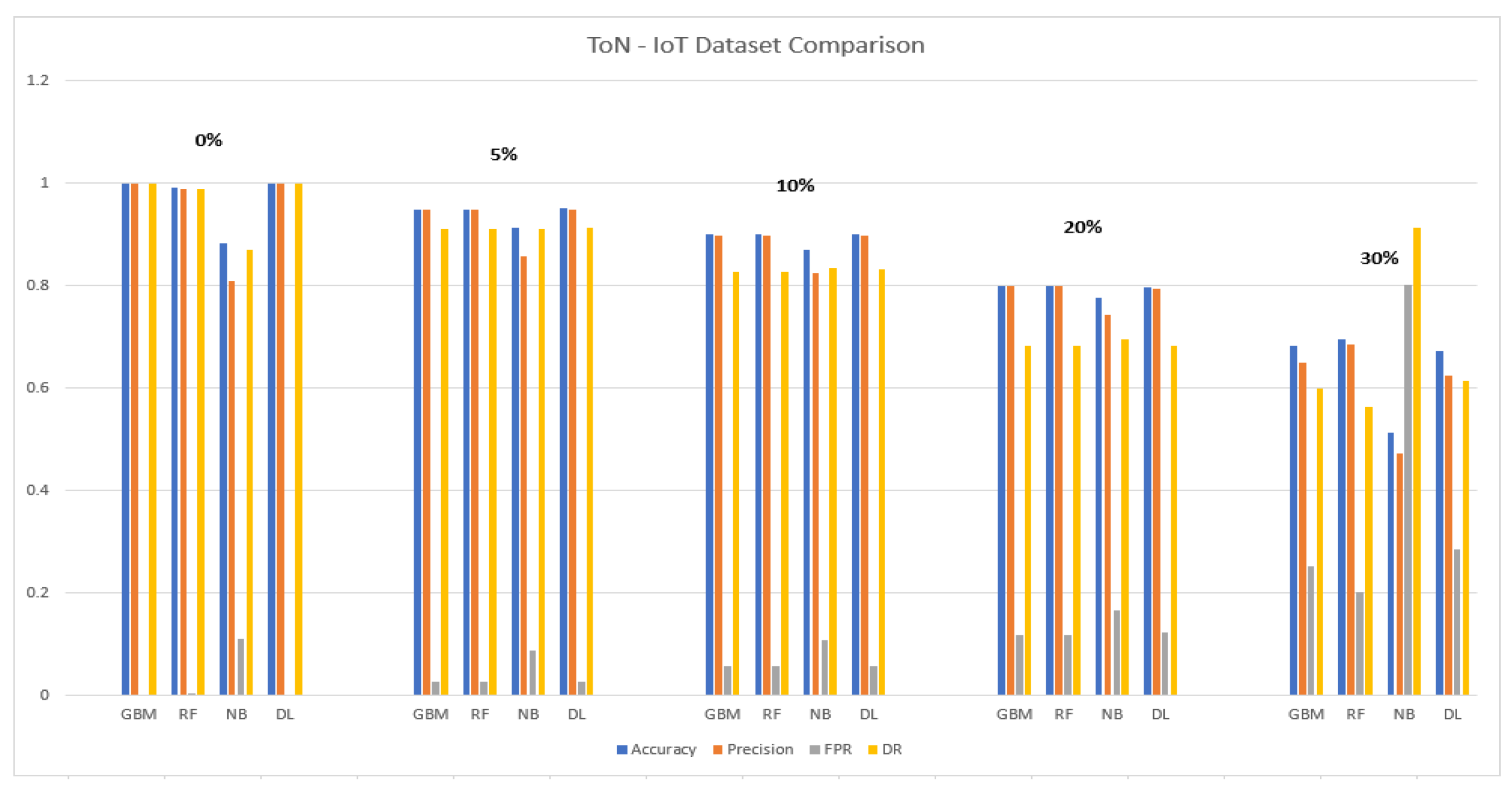

Evaluation of Backdoor Poisoning Attacks. In this section, we evaluate our backdoor poisoning strategies against the state-of-the-art deep learning models, using face recognition systems as a case study. Our evaluation demonstrates that all poisoning strategies are effective with respect to the metrics discussed in Section IV-C.
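The metrics of Section IV-C are not reproduced in this excerpt. As a hedged illustration, backdoor evaluations commonly report two quantities: the fraction of trigger-carrying inputs classified as the target label, and the accuracy on clean inputs. The sketch below assumes these two measures; `predict`, `attack_success_rate`, and `clean_accuracy` are hypothetical names, and `toy_predict` is a stand-in for a trained model.

```python
import numpy as np


def attack_success_rate(predict, backdoor_inputs, target_label):
    """Fraction of trigger-carrying inputs that `predict` maps to the target label."""
    preds = np.asarray(predict(backdoor_inputs))
    return float(np.mean(preds == target_label))


def clean_accuracy(predict, clean_inputs, clean_labels):
    """Fraction of clean inputs that `predict` labels correctly."""
    preds = np.asarray(predict(clean_inputs))
    return float(np.mean(preds == np.asarray(clean_labels)))


def toy_predict(x):
    """Toy stand-in for a backdoored model (assumption, for illustration only).

    Outputs label 7 whenever the bottom-right pixel equals 1.0, else label 0.
    """
    return np.where(x[:, -1, -1] == 1.0, 7, 0)


# Four clean inputs (all zeros) and four inputs carrying the assumed trigger.
clean = np.zeros((4, 8, 8))
triggered = clean.copy()
triggered[:, -1, -1] = 1.0

asr = attack_success_rate(toy_predict, triggered, target_label=7)
acc = clean_accuracy(toy_predict, clean, clean_labels=np.zeros(4, dtype=int))
```

A successful backdoor in this sense keeps clean accuracy close to that of an unpoisoned model while driving the success rate on triggered inputs up.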

How do you do a backdoor attack on a learning system?

Backdoor Adversary Using Data Poisoning. In general, there are several ways to instantiate backdoor attacks against learning systems. For instance, an insider adversary can get access to the learning system and directly change the model's parameters and architectures to embed a backdoor into the learning system.

|

Detecting Backdoor Attacks on Deep Neural Networks by Activation

safety of systems using such models has become an increasing concern. In particular, ML models are often trained on data from potentially untrustworthy sources, providing adversaries with the ... tack generates a backdoor or trojan in a deep neural network ... while these methods may work for poisoning attacks aimed |

|

Systematic Evaluation of Backdoor Data Poisoning Attacks on Image

safety risk for machine learning (ML) systems through data poisoning can be rendered ineffective after just Targeted backdoor attacks on deep learn- |

|

Neural Backdoors in NLP - Stanford University

introduces security vulnerabilities via backdoor attacks on machine learning models [10]) in order to gain unauthorized access to some other system data poisoning attacks because the former generally has a specific targeted output, and |

|

Evaluation of Backdoor Attacks on Deep Reinforcement Learning

on the assumption of backdoors being targeted ... We evaluated ... attacks on deep learning systems using data poisoning,” arXiv preprint arXiv:1712.05526 |

|

Spectral Signatures in Backdoor Attacks - NIPS Proceedings - NeurIPS

Another line of work on data poisoning deal with attacks that are meant to degrade the model's Targeted backdoor attacks on deep learning systems using |

|

Identifying and Mitigating Backdoor Attacks in Neural Networks

in deep neural networks (DNNs) make them susceptible to backdoor attacks, where hidden target label, when the associated trigger is applied to inputs backdoor attacks on deep learning systems using data poisoning,” arXiv preprint |

|

You Autocomplete Me: Poisoning Vulnerabilities in Neural - USENIX

We quantify the efficacy of targeted and untargeted data- and model-poisoning type of attacks on machine learning models, crafted to affect Code completion systems based on however, such a backdoor may be noticed and removed In |
