batch size epoch learning rate

What is the number of epochs in deep learning?

The number of epochs is a hyperparameter of gradient descent that controls the number of complete passes through the training dataset.

What is the difference between batch size and number of epochs?

The batch size is a hyperparameter of gradient descent that controls the number of training samples to work through before the model’s internal parameters are updated. The number of epochs is a hyperparameter of gradient descent that controls the number of complete passes through the training dataset.

How many iterations does it take to complete a training epoch?

Let’s say we have 2000 training examples. If we divide the dataset into batches of 500, it takes 4 iterations to complete 1 epoch. Here the batch size is 500 and the number of iterations per epoch is 4.
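The arithmetic above can be sketched in a few lines of Python. The function name `iterations_per_epoch` is a hypothetical helper, and rounding up for a final partial batch is an assumption (some frameworks drop the last incomplete batch instead).

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Return how many batches (parameter updates) make up one epoch."""
    if not 1 <= batch_size <= num_samples:
        raise ValueError("batch_size must be between 1 and num_samples")
    # Round up so that a final, smaller batch still counts as one iteration.
    return math.ceil(num_samples / batch_size)


# The example from the text: 2000 samples in batches of 500.
print(iterations_per_epoch(2000, 500))  # 4
```

With a batch size that does not divide the dataset evenly, for example 300, the last batch would simply hold the remaining samples.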

What is batch size in neural network training?

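Batch size defines the number of samples we use in one iteration (one parameter update) to train a neural network. There are three types of gradient descent with respect to the batch size: Batch gradient descent – uses all samples from the training set for each update, so one epoch is a single iteration. Stochastic gradient descent – uses only one random sample from the training set for each update. Mini-batch gradient descent – uses more than one sample but fewer than the whole training set for each update.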
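As a rough illustration, the variants can be told apart purely by how the batch size compares with the size of the training set, counted per parameter update. The function name below is hypothetical, and "mini-batch gradient descent" is the commonly used name for the in-between case.

```python
def gradient_descent_variant(batch_size: int, num_samples: int) -> str:
    """Name the gradient descent variant implied by the batch size."""
    if not 1 <= batch_size <= num_samples:
        raise ValueError("batch_size must be between 1 and num_samples")
    if batch_size == num_samples:
        # Every update sees the whole training set.
        return "batch gradient descent"
    if batch_size == 1:
        # Every update sees a single sample.
        return "stochastic gradient descent"
    # Anything in between.
    return "mini-batch gradient descent"


for size in (2000, 1, 32):
    print(size, "->", gradient_descent_variant(size, num_samples=2000))
```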

Overview

This post is divided into five parts:

1. Stochastic Gradient Descent
2. What Is a Sample?
3. What Is a Batch?
4. What Is an Epoch?
5. What Is the Difference Between Batch and Epoch?

Source: machinelearningmastery.com

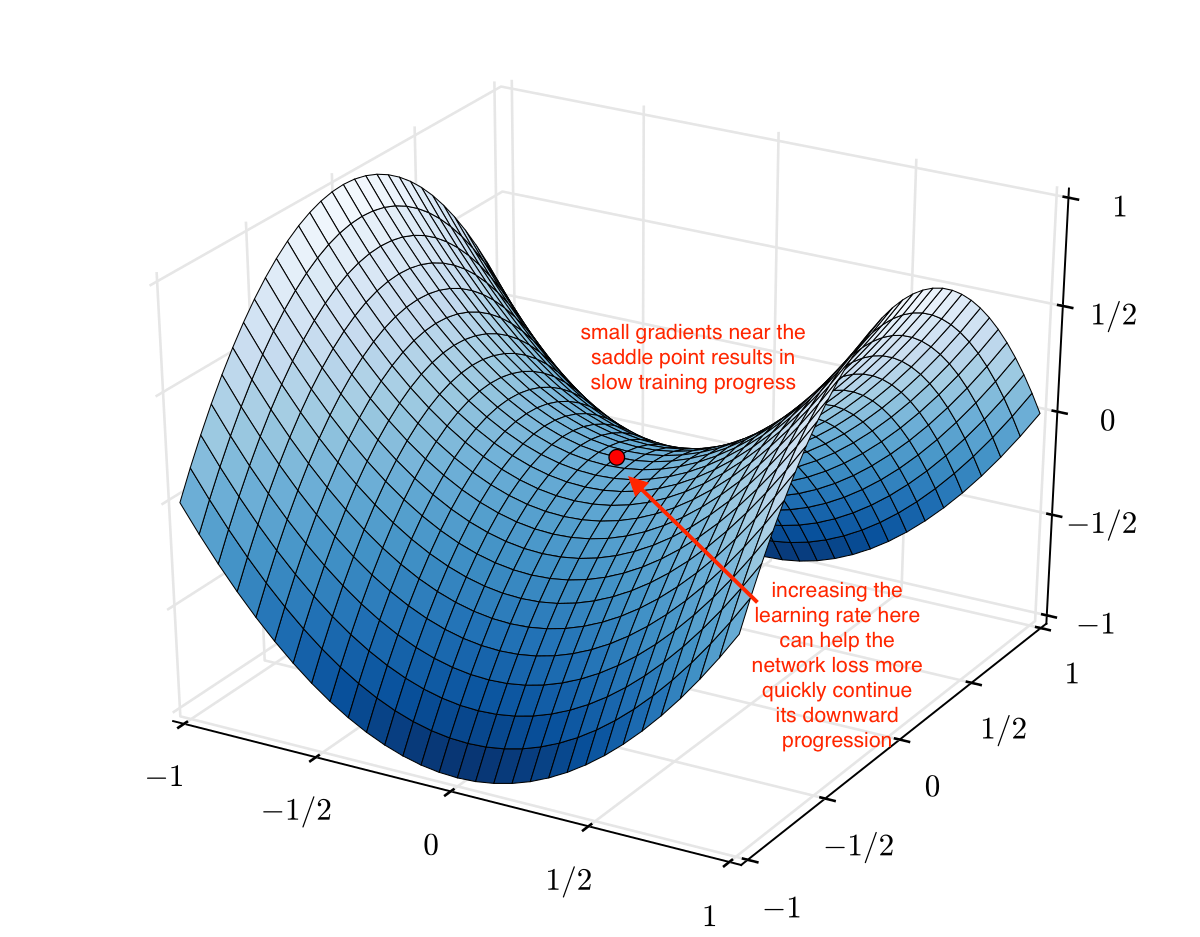

Stochastic Gradient Descent

Stochastic Gradient Descent, or SGD for short, is an optimization algorithm used to train machine learning models, most notably the artificial neural networks used in deep learning. The job of the algorithm is to find a set of internal model parameters that perform well against some performance measure such as logarithmic loss or mean squared error.

What Is a Sample?

A sample is a single row of data. It contains inputs that are fed into the algorithm and an output that is compared to the prediction to calculate an error. A training dataset is composed of many rows of data, i.e. many samples. A sample may also be called an instance, an observation, an input vector, or a feature vector.

What Is a Batch?

The batch size is a hyperparameter that defines the number of samples to work through before updating the internal model parameters. Think of a batch as a for-loop iterating over one or more samples and making predictions. At the end of the batch, the predictions are compared to the expected output variables and an error is calculated. This error is then used to update the model.
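The "batch as a for-loop" picture can be sketched as follows. This is a minimal illustration, not the source's implementation: the one-parameter model `y = w * x`, the mean squared error loss, and the name `train_one_batch` are all assumptions chosen to keep the example small.

```python
def train_one_batch(w: float, batch: list, learning_rate: float) -> float:
    """Process one batch and return the updated weight of the model y = w * x."""
    grad = 0.0
    for x, y in batch:              # loop over the samples in the batch
        prediction = w * x          # make a prediction
        error = prediction - y      # compare it with the expected output
        grad += 2.0 * error * x     # gradient of (w * x - y) ** 2 w.r.t. w
    grad /= len(batch)              # average over the batch
    # Only now, at the end of the batch, is the parameter updated.
    return w - learning_rate * grad


batch = [(1.0, 2.0), (2.0, 4.0)]    # samples drawn from y = 2 * x
w = train_one_batch(0.0, batch, learning_rate=0.1)
print(w)
```

Note that the weight changes once per batch, not once per sample: that is what the batch size controls.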

What Is an Epoch?

The number of epochs is a hyperparameter that defines the number of times that the learning algorithm will work through the entire training dataset. One epoch means that each sample in the training dataset has had an opportunity to update the internal model parameters. An epoch is composed of one or more batches.
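The relationship between epochs and batches can be sketched as two nested loops. The helper names are hypothetical, and reshuffling the data at the start of every epoch is an assumption (a common practice, not something the text above requires).

```python
import random


def minibatches(samples, batch_size, rng):
    """Yield shuffled batches that together cover every sample exactly once."""
    shuffled = samples[:]
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]


def count_updates(num_samples, batch_size, epochs, seed=0):
    """Count parameter updates over a whole training run."""
    rng = random.Random(seed)
    samples = list(range(num_samples))
    updates = 0
    for _ in range(epochs):                  # outer loop: epochs
        seen = 0
        for batch in minibatches(samples, batch_size, rng):  # inner loop: batches
            seen += len(batch)
            updates += 1                     # the model would be updated here
        assert seen == num_samples           # one epoch touches every sample once
    return updates


print(count_updates(num_samples=2000, batch_size=500, epochs=3))  # 12
```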

What Is the Difference Between Batch and Epoch?

The batch size is the number of samples processed before the model is updated. The number of epochs is the number of complete passes through the training dataset. The size of a batch must be more than or equal to one and less than or equal to the number of samples in the training dataset. The number of epochs can be set to any integer value of one or more.

Further Reading

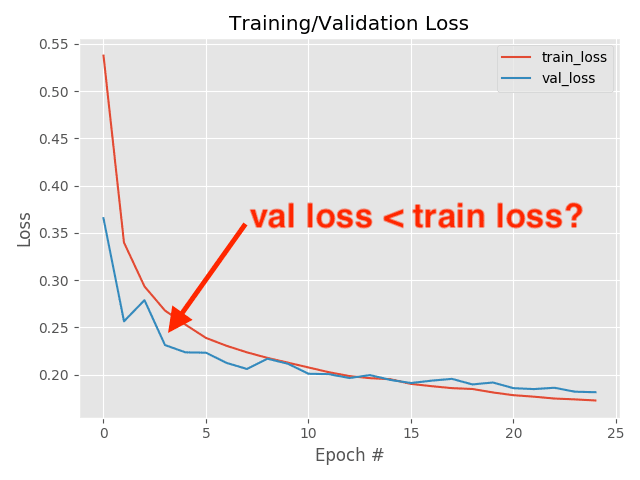

This section provides more resources on the topic if you are looking to go deeper. 1. Gradient Descent For Machine Learning 2. How to Control the Speed and Stability of Training Neural Networks Batch Size 3. A Gentle Introduction to Mini-Batch Gradient Descent and How to Configure Batch Size 4. A Gentle Introduction to Learning Curves for Diagnosin

Summary

In this post, you discovered the difference between batches and epochs in stochastic gradient descent. Specifically, you learned:

1. Stochastic gradient descent is an iterative learning algorithm that uses a training dataset to update a model.
2. The batch size is a hyperparameter of gradient descent that controls the number of training samples to work through before the model’s internal parameters are updated.

|

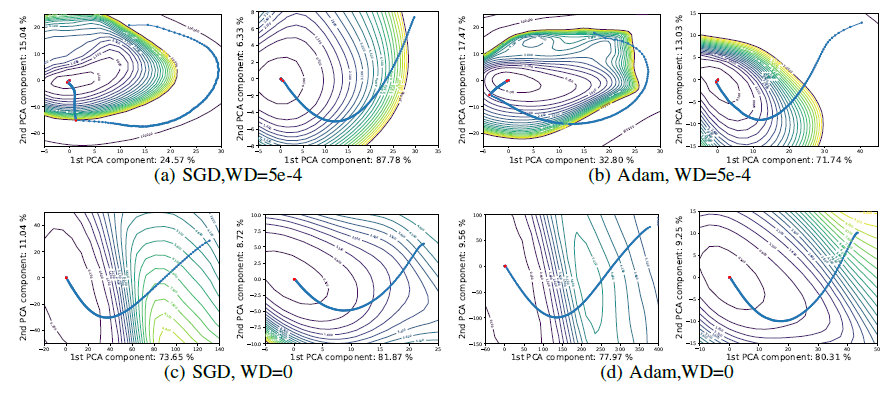

DONT DECAY THE LEARNING RATE INCREASE THE BATCH SIZE

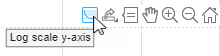

It reaches equivalent test accuracies after the same number of training epochs but with fewer parameter updates |
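To see why a growing batch size means fewer parameter updates for the same number of epochs, one can simply count iterations under two schedules. Everything numeric here is illustrative and not taken from the paper: the dataset size of 50,000, the starting batch size of 128, the growth factor of 5, and the 9-epoch budget are all assumptions, and `updates_for_schedule` is a hypothetical helper.

```python
import math


def updates_for_schedule(num_samples, batch_size_per_epoch):
    """Total parameter updates when epoch i uses batch_size_per_epoch[i]."""
    return sum(math.ceil(num_samples / b) for b in batch_size_per_epoch)


num_samples = 50_000  # hypothetical dataset size

# Schedule A: constant batch size (the learning rate would be decayed instead).
fixed = [128] * 9
# Schedule B: batch size multiplied by 5 wherever schedule A would decay.
growing = [128] * 3 + [640] * 3 + [3200] * 3

print(updates_for_schedule(num_samples, fixed))    # 3519
print(updates_for_schedule(num_samples, growing))  # 1458
```

Both schedules run for the same 9 epochs; the sketch only counts updates and says nothing about the accuracy either schedule would reach.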

|

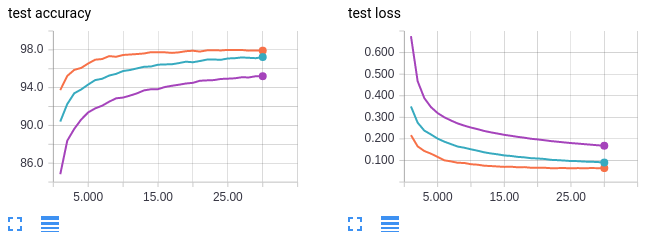

Scaling SGD Batch Size to 32K for ImageNet Training

Table 1: ImageNet Dataset by ResNet50 Model with poly learning rate (LR) rule. Batch Size Base LR power momentum weight decay Epochs Peak Test Accuracy. |
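The table caption refers to a "poly" learning rate rule with a base LR and a power. A common definition of such a rule (for example, the `poly` policy in Caffe) is `base_lr * (1 - step / total_steps) ** power`; whether the paper uses exactly this form is an assumption here, and the function name and sample values are hypothetical.

```python
def poly_lr(base_lr: float, step: int, total_steps: int, power: float) -> float:
    """Polynomial decay from base_lr at step 0 down to 0 at total_steps."""
    if not 0 <= step <= total_steps:
        raise ValueError("step must be between 0 and total_steps")
    return base_lr * (1.0 - step / total_steps) ** power


# Illustrative values only: base LR 0.2, power 2, 100 steps in total.
for step in (0, 50, 100):
    print(step, poly_lr(0.2, step, total_steps=100, power=2.0))
```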

AdaBatch: Adaptive Batch Sizes for Training Deep Neural Networks

... iterations are required for one epoch of training (i.e. one pass over the data). After an epoch of training with a learning rate α and batch size r ...

AdaBatch: Adaptive Batch Sizes for Training Deep Neural Networks

14 Feb 2018: ... requires careful choice of both learning rate and batch size. While smaller batch sizes generally converge in fewer training epochs ...

|

Analyzing Performance of Deep Learning Techniques for Web

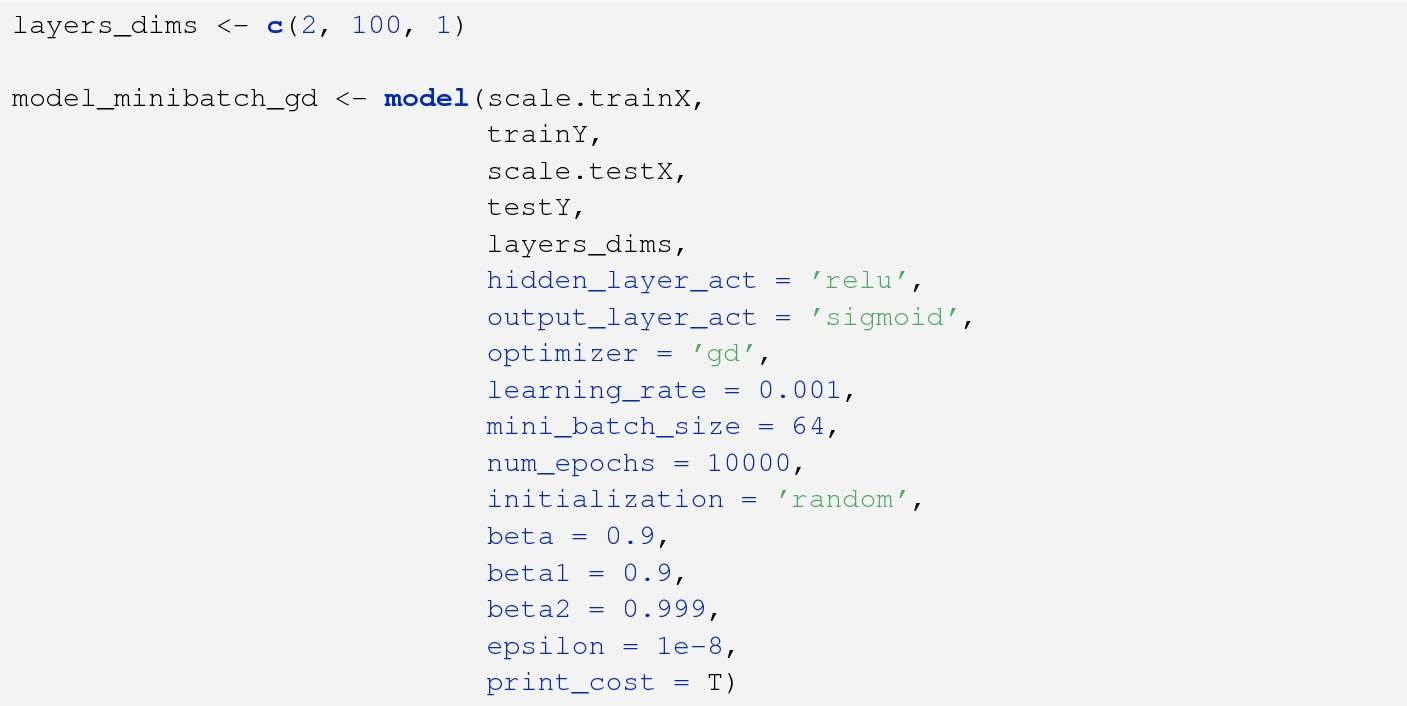

that have been configured are number of hidden units activation function |

| Effect of Hyper-Parameter Optimization on the Deep Learning Model |

The Limit of the Batch Size

15 Jun 2020: After trying various different optimization techniques, we find only LAMB optimizer with extremely long learning rate warmup epochs and ...

Hyperparameter Tuning and Implicit Regularization in ...

... are independent of batch size under a constant epoch budget. In the curvature dominated regime the optimal learning rate is independent of batch size ...

Large-Batch Training for LSTM and Beyond

14 Nov 2018: From 80th epoch to 90th epoch, LEGW uses the constant learning rate of $0.001 \times 2^{2.5}$. When we scale the batch size from 1K to 2K ...

Inefficiency of K-FAC for Large Batch Size Training

These two learning rate decays separate the training process into three stages. Because training extends to a greater number of epochs for large batches under ...

|

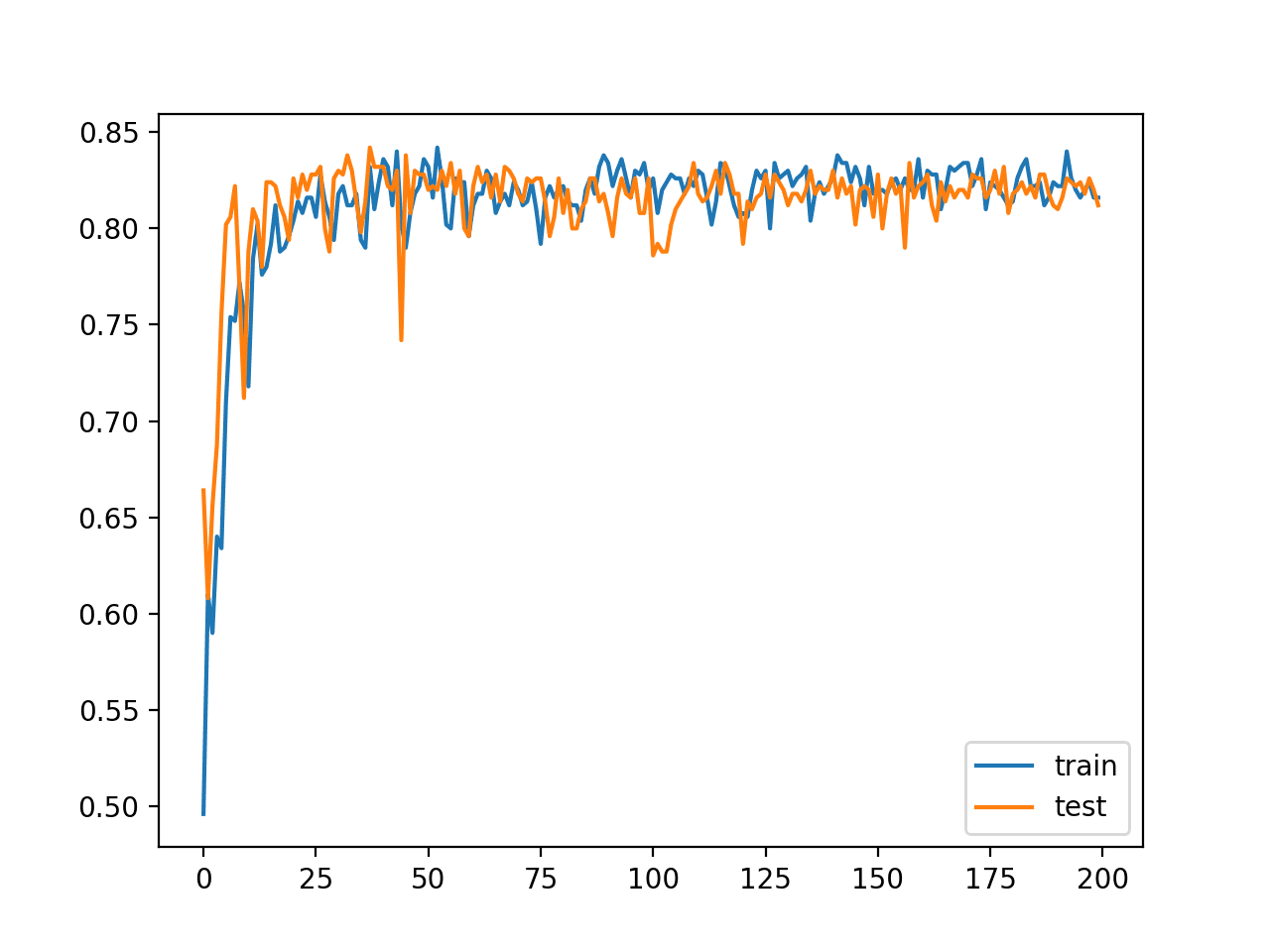

Control Batch Size and Learning Rate to Generalize Well - NeurIPS

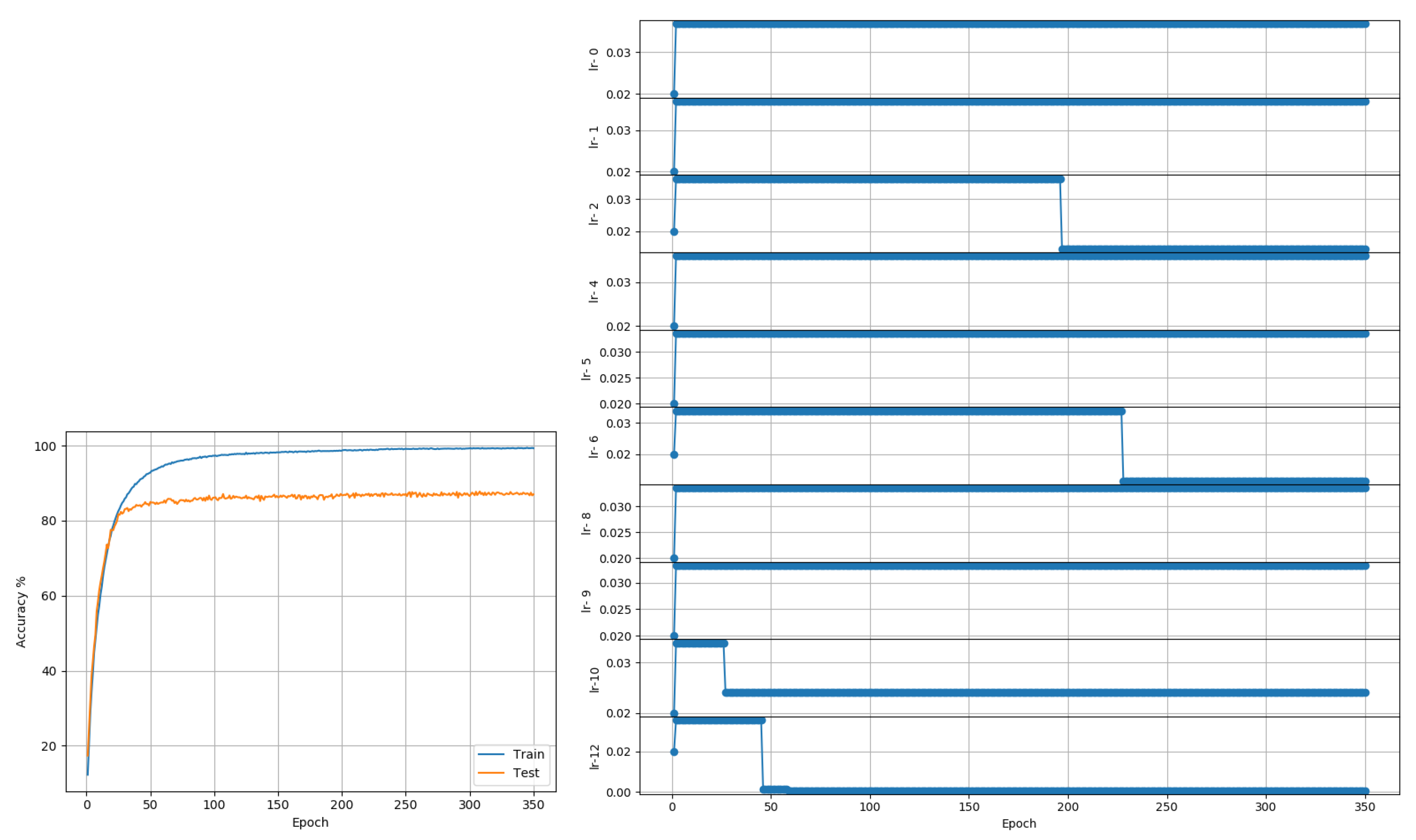

training strategy that we should control the ratio of batch size to learning rate not too large to The test accuracies of all 200 epochs are collected for analysis |

Analyzing Performance of Deep Learning - ScienceDirect.com

... that have been configured are number of hidden units, activation function, optimization function, learning rate, number of epochs and batch size. 2) The paper ...

Online Batch Selection for Faster Training of Neural ...

... first bias-corrected momentum $\hat{m}_t$, with the learning rates set according to the ... Online Batch Selection in AdaDelta, Batch Size 64 ... Epochs ... Training cost ...

Training ImageNet in 1 Hour - Facebook Research

... size and apply a simple warmup phase for the first few epochs of training ... All other ... batch $\cup_j \mathcal{B}_j$ of size $kn$ and learning rate $\hat{\eta}$ yields: $\hat{w}_{t+1} = w_t - \hat{\eta} \frac{1}{kn} \sum \dots$
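The snippet mentions a batch of size kn with its own learning rate and a warmup phase over the first few epochs. A minimal sketch of how such a schedule is often set up is shown below; the linear scaling of the learning rate by k, the linear shape of the ramp, and the name `warmup_lr` are assumptions made for illustration, not details confirmed by the snippet.

```python
def warmup_lr(base_lr: float, k: float, epoch: float, warmup_epochs: float) -> float:
    """Ramp the learning rate from base_lr up to k * base_lr during warmup."""
    target_lr = k * base_lr          # assumed linear scaling with the batch factor k
    if epoch >= warmup_epochs:
        return target_lr             # warmup finished: use the scaled rate
    # Linear interpolation between the small-batch rate and the target rate.
    return base_lr + (target_lr - base_lr) * epoch / warmup_epochs


# Illustrative values only: base rate 0.1, batch 8x larger, 5 warmup epochs.
for epoch in (0, 2.5, 5, 10):
    print(epoch, warmup_lr(0.1, k=8, epoch=epoch, warmup_epochs=5))
```

Fractional epochs are allowed so the rate can be adjusted after every iteration rather than once per epoch.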

On the Generalization Benefit of Noise in Stochastic Gradient Descent

... that in our constant step experiments, the epoch budget is proportional to the batch size, which ensures that all batch sizes decay the learning rate after the same ...

On the Generalization Benefit of Noise in Stochastic Gradient Descent

... sizes under a constant epoch budget (such that small batches are allowed to take ... learning rate and batch size (Krizhevsky, 2014; Goyal et al., 2017; Smith et ...

The general inefficiency of batch training for gradient descent learning

... gradient during the course of an epoch. It can also handle any size of training set without having to reduce the learning rate. Section 2 surveys the neural ...

![Tip] Reduce the batch size to generalize your model - Deep Tip] Reduce the batch size to generalize your model - Deep](https://www.mdpi.com/entropy/entropy-22-00560/article_deploy/html/images/entropy-22-00560-g001.png)

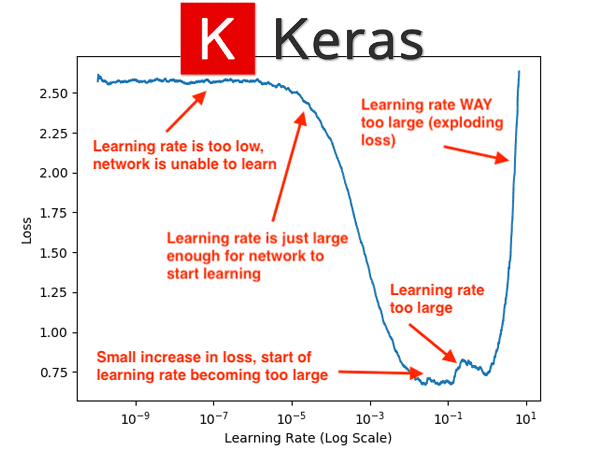

![PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural](https://pyimagesearch.com/wp-content/uploads/2016/10/sgd_loss.jpg)

![PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural](https://pyimagesearch.com/wp-content/uploads/2019/08/learing_rate_finder_lr_plot.png)

![PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural PDF] AdaBatch: Adaptive Batch Sizes for Training Deep Neural](https://media.arxiv-vanity.com/render-output/4644545/rates_1b.png)